| Conjugate guided gradient (CGG) method for robust inversion and its application to velocity-stack inversion |  |


Another way to modify the gradient direction is to modify the gradient vector after it has been computed from a given residual. Since the gradient vector lies in the model space, any modification of it imposes a constraint in the model space. If we know some characteristic of the solution that can be expressed as a weighting in the solution space, we can redirect the gradient vector by applying that weight to it. Again, because the forward operator is kept unchanged, we do not need to recompute the residual when the weight changes.

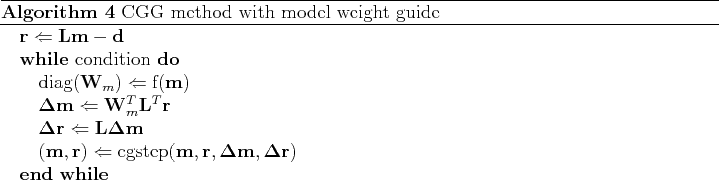

This algorithm can be implemented as shown in Algorithm 4.
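The procedure described above can be sketched in NumPy. This is only a minimal sketch under stated assumptions, not a reproduction of Algorithm 4: the forward operator is taken to be a dense matrix `L`, the weight is the absolute value of the current model raised to a power (the choice this section goes on to discuss), and the names `cgstep`, `cgg_model_weight`, `p`, and `niter` are hypothetical. Leaving the first iteration unweighted is also an assumption, made because a zero starting model would otherwise zero out the gradient.

```python
import numpy as np

def cgstep(m, r, g, gg, s, ss):
    """One conjugate-direction step: plane search over g and the previous step s."""
    gdg = gg @ gg
    if gdg == 0.0:                      # direction has no effect on the data
        return m, r, s, ss
    if s is None:                       # first step: plain steepest descent
        alpha, beta = -(gg @ r) / gdg, 0.0
        s, ss = np.zeros_like(m), np.zeros_like(r)
    else:
        sds, gds = ss @ ss, gg @ ss
        det = gdg * sds - gds * gds
        if det <= 1e-12 * gdg * sds:    # nearly parallel: fall back to steepest descent
            alpha, beta = -(gg @ r) / gdg, 0.0
        else:                           # solve the 2x2 normal equations
            gdr, sdr = -(gg @ r), -(ss @ r)
            alpha = (sds * gdr - gds * sdr) / det
            beta = (gdg * sdr - gds * gdr) / det
    s = alpha * g + beta * s            # step in model space
    ss = alpha * gg + beta * ss         # corresponding step in data space
    return m + s, r + ss, s, ss

def cgg_model_weight(L, d, p=1.0, niter=10):
    """Guided-gradient iteration with a model-space weight |m|**p (p >= 0 assumed)."""
    m = np.zeros(L.shape[1])
    r = L @ m - d                       # residual computed once, then only updated
    s = ss = None
    for it in range(niter):
        g = L.T @ r                     # gradient from the current residual
        if it > 0:                      # assumption: first iteration is unweighted
            g = np.abs(m) ** p * g      # redirect the gradient with the model weight
        gg = L @ g                      # forward operator itself is unchanged
        m, r, s, ss = cgstep(m, r, g, gg, s, ss)
    return m, r
```

Because the weight only rescales the search direction and `L` is never modified, the residual `r` stays consistent with `L @ m - d` throughout the loop, and each plane search cannot increase the residual norm.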

Even though model weighting has a different meaning from residual weighting in the inversion result, the analyses are similar in both cases. Just as we redefined the contribution of each residual element by weighting it with its own absolute value raised to some power,

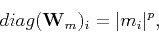

we can do the same thing with each model element in the solution,

|

(10) |

where  is a real number that depends on the problem we wish to solve.

If the operator used in the inversion is close to unitary, the solution obtained after the first iteration already closely approximates the true solution. Weighting the gradient with some power of the absolute value of the previous iteration's model therefore down-weights small model values and improves the fit to the data by emphasizing model components that already have large values.
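A small numerical illustration may make the effect of the weight concrete. The values below are made up, and the helper name `guide_gradient` and the exponent argument `p` are hypothetical; the snippet only shows how scaling by the absolute value of the previous model, raised to a power, changes a gradient whose components start out equal.

```python
import numpy as np

def guide_gradient(g, m_prev, p):
    """Scale each gradient component by |m_prev_i| ** p (p >= 0 assumed)."""
    return np.abs(m_prev) ** p * g

m_prev = np.array([2.0, 0.1, -1.0])    # made-up model from a previous iteration
g = np.ones(3)                         # made-up gradient with equal components

g_p1 = guide_gradient(g, m_prev, 1.0)  # scales components by 2, 0.1, 1
g_p2 = guide_gradient(g, m_prev, 2.0)  # scales components by 4, 0.01, 1
```

The component attached to the small model value (0.1) is scaled by 0.1 for an exponent of 1 and by 0.01 for an exponent of 2, so a larger exponent concentrates the update more strongly on model components that are already large.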



2011-06-26